TL;DR: Testfully is a cloud-based API integration testing and API monitoring platform. Users define their test cases and run the tests on demand or in the background. Users can opt in to be notified when tests fail. Testfully saves software teams time and money by eliminating the need to write, maintain and run end-to-end tests.

Videos

If you’re looking for a quick introduction to Testfully, please watch the below video.

For a more in-depth demo of the dashboard and major features, please watch the below video or read the text version.

Introduction

Testfully is a generic API integration testing and API monitoring platform with a focus on simplicity, reliability and robustness. In a nutshell, Testfully sends HTTP requests to your API and verifies the response based on the user-defined acceptance criteria. Users can run test cases in the browser or leverage Testfully’s servers.

Testfully is designed with flexibility in mind and does not expect your API to follow certain practices. We consider Testfully a no-code tool for engineering teams of any size, and our goal is to do all the heavy lifting around automated API testing & monitoring so you can focus on building your product and releasing it to your customers faster.

We strongly believe that software testing (including API testing) is a team effort, and our platform is built from the ground up to serve entire engineering teams, not only back-end engineers. We envision Testfully being used by:

- Back-end engineers for verification of APIs during development and while deployed in various environments.

- QA engineers to define and run happy & unhappy test cases.

- DevOps engineers to monitor availability of the production APIs.

- Front-end and mobile engineers to catch breaking API changes early.

With the introduction out of the way, let’s jump straight into tests, Testfully’s core feature and a way to validate the correctness of your API.

Test Cases

Tests are the core feature of Testfully, and everything else is an abstraction over them. Test cases are an automated way to validate the correctness of your API. What makes tests special is the ability to include multiple steps (HTTP requests), so you’re no longer limited to a single request / response paradigm.

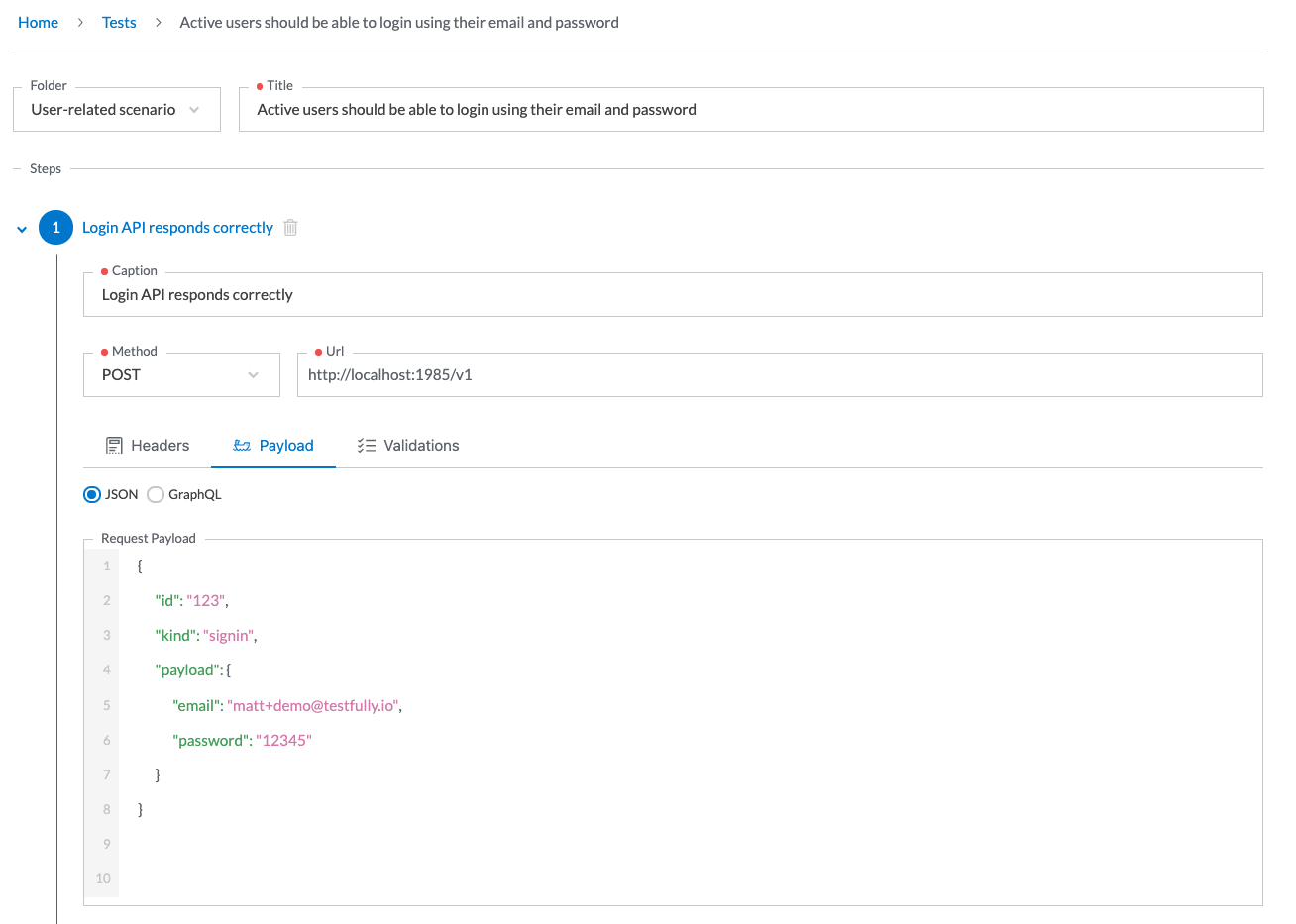

As mentioned, steps are the actual HTTP requests Testfully sends on your behalf, and they come with a great deal of flexibility and features.

- HTTP Method & URL of each step can be defined independent of other steps

- Unlimited Request Headers can be added to any of the steps

- JSON & GraphQL request bodies are supported (Forms and other types will be added soon)

- Response Status, Time, Headers and Body of each step can be validated automatically by Testfully for you

- Auto-generated fake data can be included in URL, Request Headers and Request Body

- Reusable values (like access tokens) can be defined and added to URL, Request Headers and Request Body
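To make the validation idea concrete, here is a minimal sketch of how a step’s response could be checked against acceptance criteria for status, time, headers and body. This is not Testfully’s implementation: the `Expectation`, `Response` and `validate` names are hypothetical and exist only for illustration, and the header match is simplified to an exact, case-sensitive comparison.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Expectation:
    """Acceptance criteria for one step (hypothetical structure)."""
    status: int
    max_time_ms: Optional[float] = None
    headers: dict = field(default_factory=dict)
    body: dict = field(default_factory=dict)


@dataclass
class Response:
    """A simplified stand-in for the HTTP response a step receives."""
    status: int
    time_ms: float
    headers: dict
    body: dict


def validate(response: Response, expected: Expectation) -> list:
    """Return failure messages; an empty list means the step passed."""
    failures = []
    if response.status != expected.status:
        failures.append(f"status: expected {expected.status}, got {response.status}")
    if expected.max_time_ms is not None and response.time_ms > expected.max_time_ms:
        failures.append(f"time: {response.time_ms} ms exceeds {expected.max_time_ms} ms")
    # Simplification: header names and values are compared exactly.
    for name, value in expected.headers.items():
        if response.headers.get(name) != value:
            failures.append(f"header {name}: expected {value!r}")
    # Only the listed top-level body fields are checked.
    for key, value in expected.body.items():
        if response.body.get(key) != value:
            failures.append(f"body field {key}: expected {value!r}")
    return failures


login_ok = Response(200, 120.0, {"content-type": "application/json"}, {"success": True})
criteria = Expectation(
    status=200,
    max_time_ms=500,
    headers={"content-type": "application/json"},
    body={"success": True},
)
print(validate(login_ok, criteria))  # prints []
```

Collecting every failure rather than stopping at the first one gives a fuller picture of what went wrong in a step.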

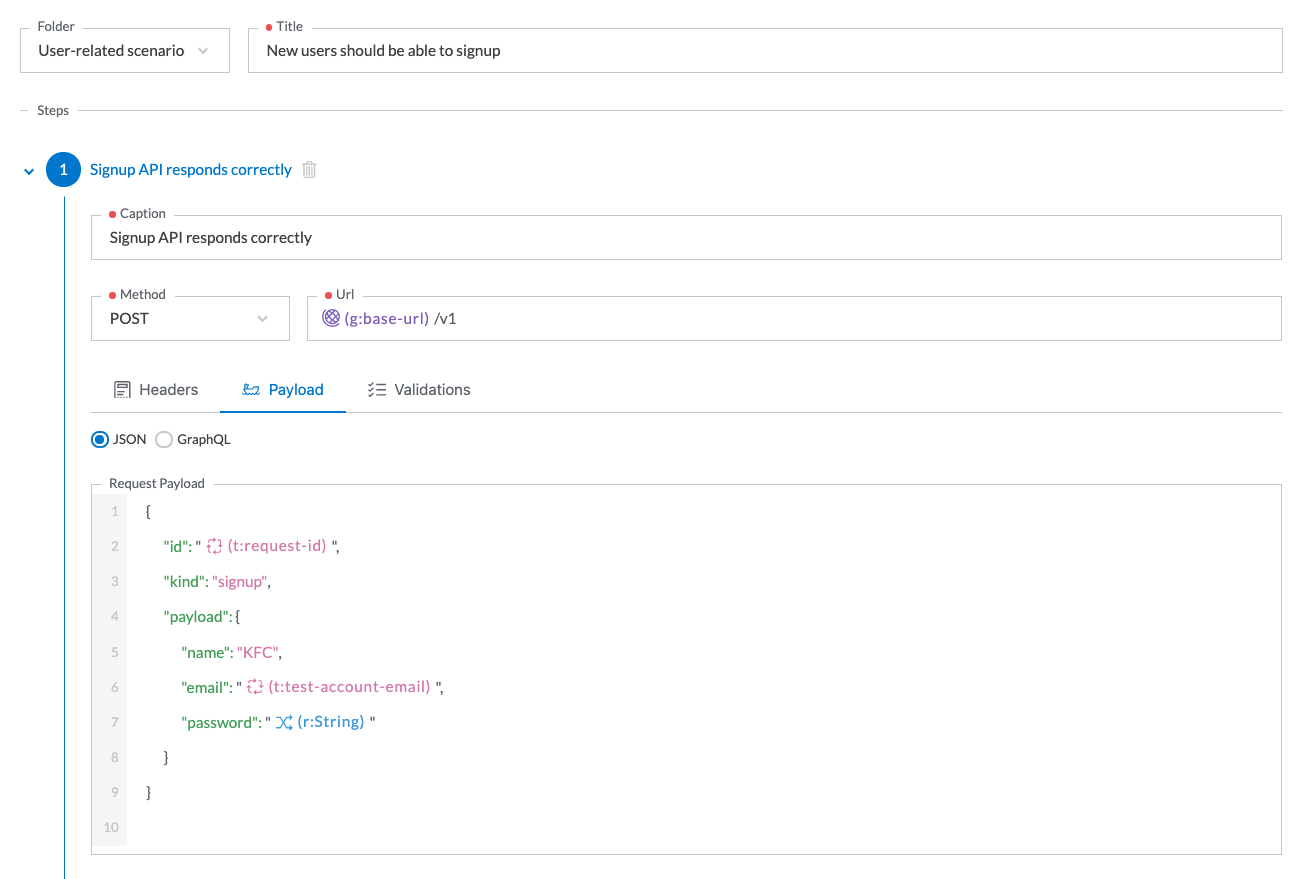

The screenshot below shows a test case for a login API.

Run in browser or server

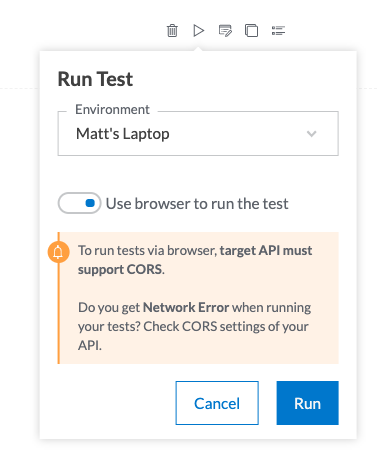

When it comes to running your integration tests, you have two options. You can either run them in the browser or leverage Testfully’s servers to run your test cases.

- Running test cases in the browser is great while developing your API locally.

- Running test cases on our servers gives you access to historical data about your test cases, as we store test results securely.

The screenshot below shows how you can run test cases in the browser.

Configs

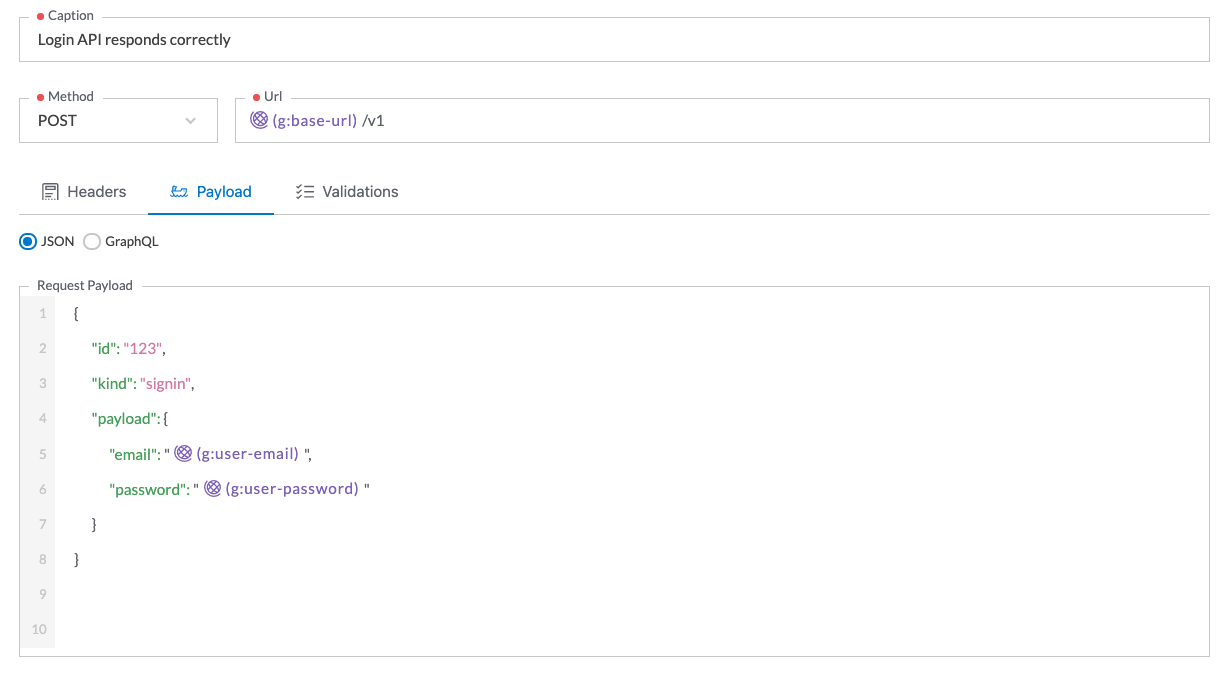

Configs are reusable values that can be embedded in test cases. They allow users to define values once and reuse them within different tests, eliminating unnecessary copy & paste of the values across multiple tests. When it comes to updating configs, you can update values in one place and have test cases use the updated config values instantly.

Some use cases for configs are:

- URL of your API as it’s something that you need for all of your test cases

- Access Token to access your API

- Username & Password for a valid & registered user in your system.

Configs can be embedded in URLs, Request Headers and Request Bodies of test steps using the (g:) pattern.
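As a rough illustration of how this kind of embedding can work, the sketch below substitutes config values into a string. The exact token spelling `(g:name)` is an assumption (the text above only names the (g:) pattern), and `embed_configs` is a hypothetical helper, not part of Testfully.

```python
import re

# Assumed token syntax: "(g:name)". The exact spelling is a guess made for
# illustration; only the "(g:)" prefix comes from the article.
CONFIG_TOKEN = re.compile(r"\(g:([A-Za-z0-9_-]+)\)")


def embed_configs(text: str, configs: dict) -> str:
    """Replace every (g:name) token in text with the matching config value."""
    def lookup(match):
        name = match.group(1)
        if name not in configs:
            raise KeyError(f"unknown config: {name}")
        return configs[name]
    return CONFIG_TOKEN.sub(lookup, text)


# Hypothetical config values.
configs = {"base-url": "https://api.example.com", "user-email": "jane@example.com"}
url = embed_configs("(g:base-url)/login", configs)
print(url)  # prints https://api.example.com/login
```

Failing loudly on an unknown config name, instead of leaving the token in place, makes typos visible before a request is sent.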

The screenshot below shows how “base-url”, “user-email” and “user-password” configs are added to the URL and request body of a test step.

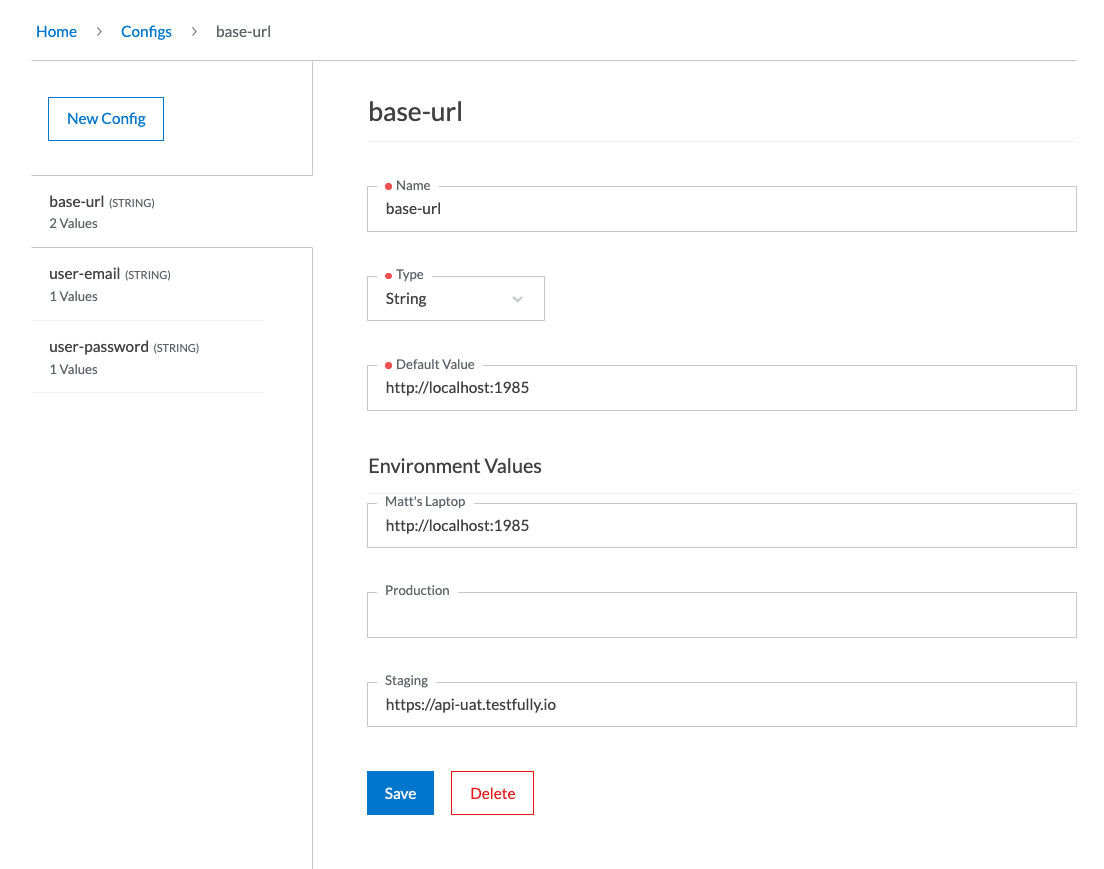

And the screenshot below shows how config values can be added to your account.

Environments

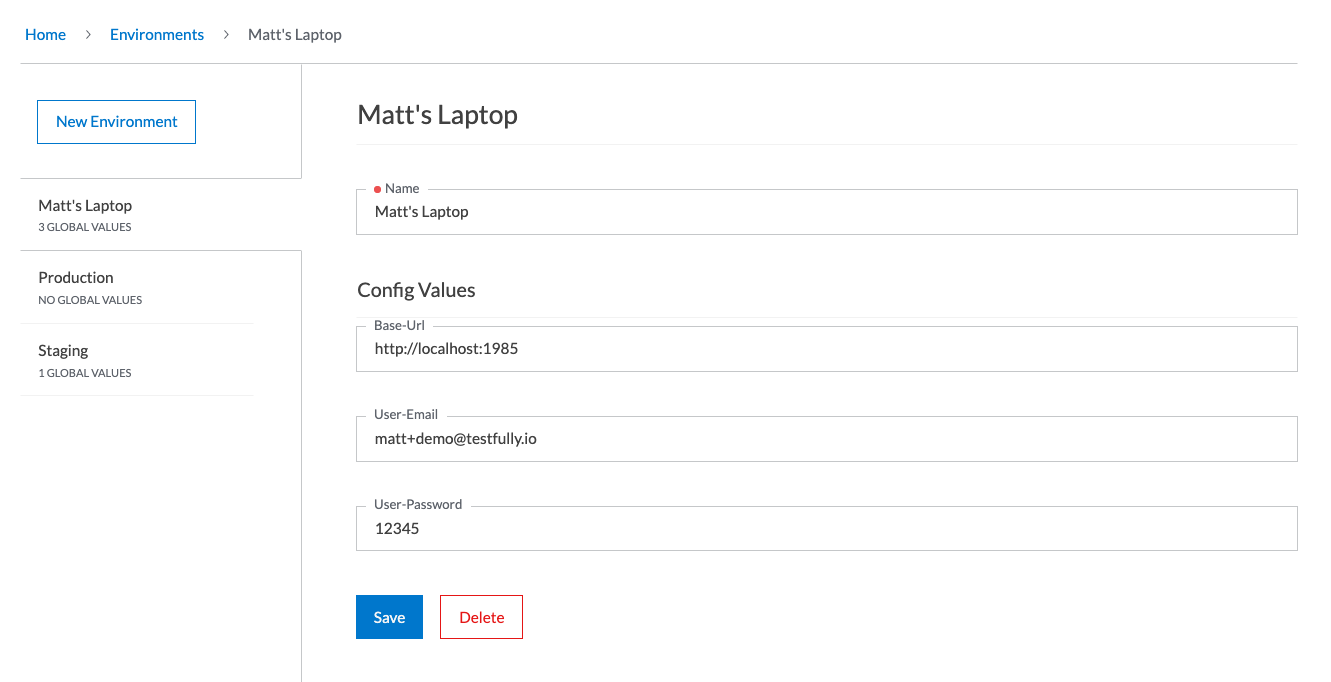

Please don’t confuse the term “Environment” with “Environment Variables” in programming. Testfully environments are simply a way to name your hosted API. Engineers use names like Production, Prod, UAT, Staging to refer to their hosted code. In Testfully, test cases are executed against environments.

Config values can also be set per environment. You can have as many environments as you wish, so don’t hesitate to add your non-production environments alongside production and run your tests against them.
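One way to picture per-environment config values is a lookup keyed by environment name. The sketch below is only an illustration: the environment names and values are made up, and falling back to shared values when an environment does not override them is our assumption here, not documented behaviour.

```python
# Hypothetical values. The fallback to shared values is an assumption.
shared = {"user-email": "jane@example.com"}
per_environment = {
    "Production": {"base-url": "https://api.example.com"},
    "Staging": {"base-url": "https://staging.example.com"},
    "Laptop": {"base-url": "http://localhost:3000"},
}


def resolve_configs(environment: str) -> dict:
    """Merge shared values with the overrides of the chosen environment."""
    if environment not in per_environment:
        raise KeyError(f"unknown environment: {environment}")
    # Later entries win, so environment values override shared ones.
    return {**shared, **per_environment[environment]}


print(resolve_configs("Staging")["base-url"])  # prints https://staging.example.com
```

With this shape, the same test case can run unchanged against any environment, because only the resolved values differ.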

The screenshot below shows three different environments (including a development laptop) with config values for each of them.

Data Generators & Templates

Imagine testing the signup flow of your API. You need at least a valid email address every time, one that you have not used before. We have been there too, and that’s why Testfully supports generating random data on your behalf. As a matter of fact, you can include random emails, text and numbers (with many more data formats to be added in the near future) in the URL, Request Headers and Body of steps within test cases using (r:) tokens.

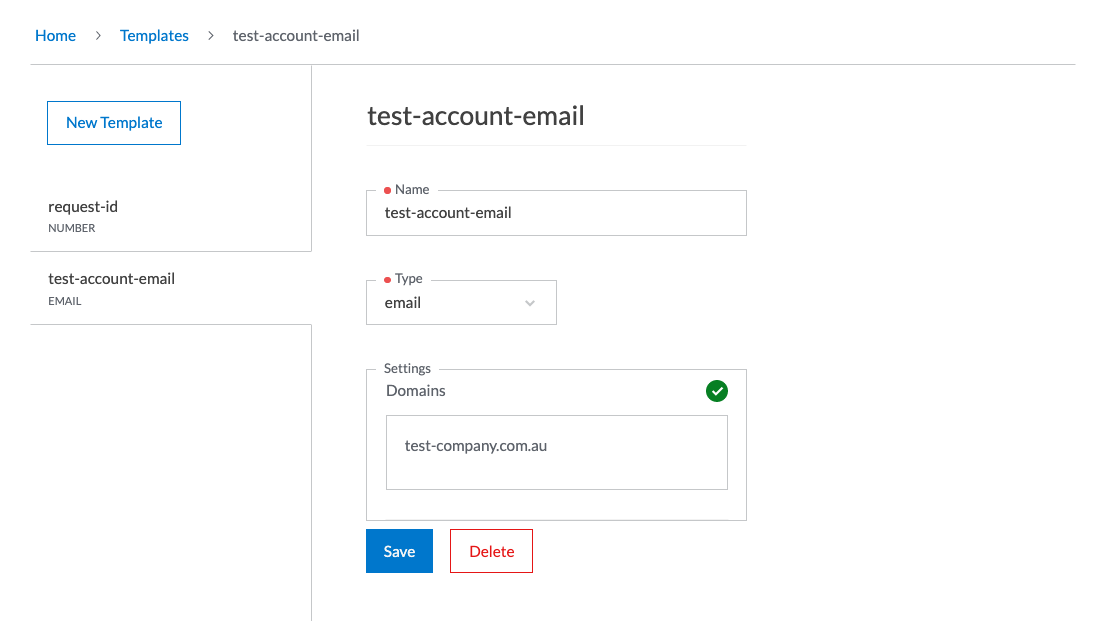

We took it one step further and let you define your own random data generators (without writing a single line of code) and use them in your test cases. Next time you need an email address with your company domain, go ahead and create a random email template for it and reuse it across your test cases. Like random data generators, templates can be embedded within test steps using the (t:) token.
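The sketch below shows one way random data tokens and templates could be expanded into concrete values. It is an illustration under assumptions: the token spellings `(r:name)` and `(t:name)`, the generator names and the `company-email` template are all hypothetical, since the text above only gives the (r:) and (t:) prefixes.

```python
import random
import re
import string


def random_text(length: int = 8) -> str:
    """Random lowercase text of the given length."""
    return "".join(random.choices(string.ascii_lowercase, k=length))


# Hypothetical built-in generators, keyed by the name used in (r:name).
GENERATORS = {
    "email": lambda: f"{random_text()}@example.com",
    "text": random_text,
    "number": lambda: str(random.randint(0, 9999)),
}

# A template is a user-defined pattern built from the generators above.
TEMPLATES = {"company-email": "(r:text)@mycompany.example"}

# Assumed token syntax: "(r:name)" for generators, "(t:name)" for templates.
TOKEN = re.compile(r"\((r|t):([A-Za-z0-9_-]+)\)")


def expand(text: str) -> str:
    """Replace every (r:...) and (t:...) token with freshly generated data."""
    def replace(match):
        kind, name = match.groups()
        if kind == "r":
            return GENERATORS[name]()
        # Templates may themselves contain (r:...) tokens, so expand again.
        return expand(TEMPLATES[name])
    return TOKEN.sub(replace, text)


body = expand('{"email": "(t:company-email)", "age": (r:number)}')
print(body)
```

Each call produces new values, which is what makes a signup test repeatable without reusing an email address.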

The screenshot below shows how fake data generators can be included in Request Body.

And the screenshot below shows an example data template for a request id.

References

Configs are a way to store reusable values, but what if you don’t know the actual value before a test runs? Say you need a test to verify that a new user can log in after signing up. To define such a test, you first register a new user with a randomly generated email and password, then use that information to log in. The referencing feature is meant to solve this problem. With it, a step can reference values generated by random data generators and templates, as well as the request headers, request body, response headers and response body of any earlier step.
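The signup-then-login scenario can be sketched as two steps that share a history of earlier requests and responses. Everything in this sketch is hypothetical: `fake_api` is an in-memory stand-in so the example runs offline, and plain dictionary lookups take the place of Testfully’s actual reference syntax, which is not shown here.

```python
import random
import string


def random_email() -> str:
    name = "".join(random.choices(string.ascii_lowercase, k=10))
    return f"{name}@example.com"


def fake_api(path: str, body: dict, users: dict) -> dict:
    """In-memory stand-in for a real API so the sketch runs offline."""
    if path == "/signup":
        users[body["email"]] = body["password"]
        return {"status": 201, "body": {"email": body["email"]}}
    if path == "/login":
        ok = users.get(body["email"]) == body["password"]
        return {"status": 200 if ok else 401, "body": {}}
    return {"status": 404, "body": {}}


users = {}
history = []  # one entry per executed step: its request and its response

# Step 1: sign up with freshly generated credentials.
signup_request = {
    "email": random_email(),
    "password": "s3cret-" + str(random.randint(1000, 9999)),
}
history.append(
    {"request": signup_request, "response": fake_api("/signup", signup_request, users)}
)

# Step 2: log in by referencing the values that step 1 actually sent.
login_request = {
    "email": history[0]["request"]["email"],
    "password": history[0]["request"]["password"],
}
history.append(
    {"request": login_request, "response": fake_api("/login", login_request, users)}
)

print(history[1]["response"]["status"])  # prints 200
```

The key point is that step 2 never needs to know the generated values in advance; it only needs to know where step 1 recorded them.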

Collections

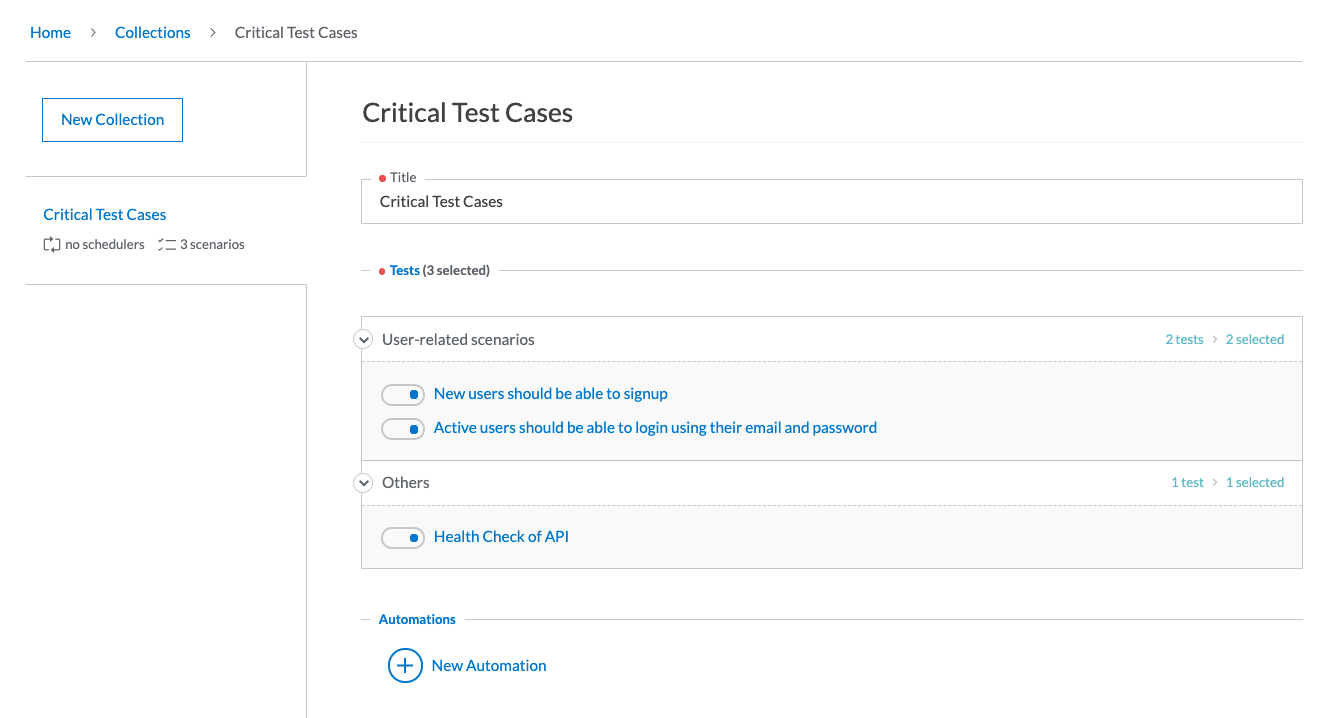

Collections are a way to group related test cases and run them simultaneously. A collection must have at least one test case, and there is no upper limit. A test case can also be included in as many collections as you wish. You can run collections against any environment or let Testfully run them in the background for you (read below for more information on that).
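Running grouped test cases simultaneously can be pictured with a small thread pool. This is a sketch under assumptions, not how Testfully runs collections: `run_collection` is a hypothetical helper, and each test case is modelled as a callable that returns True when it passes.

```python
from concurrent.futures import ThreadPoolExecutor


def run_collection(test_cases: dict) -> dict:
    """Run every test case concurrently and map each name to pass/fail."""
    if not test_cases:
        raise ValueError("a collection needs at least one test case")
    with ThreadPoolExecutor(max_workers=len(test_cases)) as pool:
        # Submit everything first so the cases run side by side.
        futures = {name: pool.submit(case) for name, case in test_cases.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical test cases: each is a callable that returns True when it passes.
collection = {
    "login": lambda: True,
    "signup": lambda: True,
    "profile": lambda: False,
}
results = run_collection(collection)
print(results)  # prints {'login': True, 'signup': True, 'profile': False}
```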

The example below shows a collection with three included test cases.

Automations & Alerts

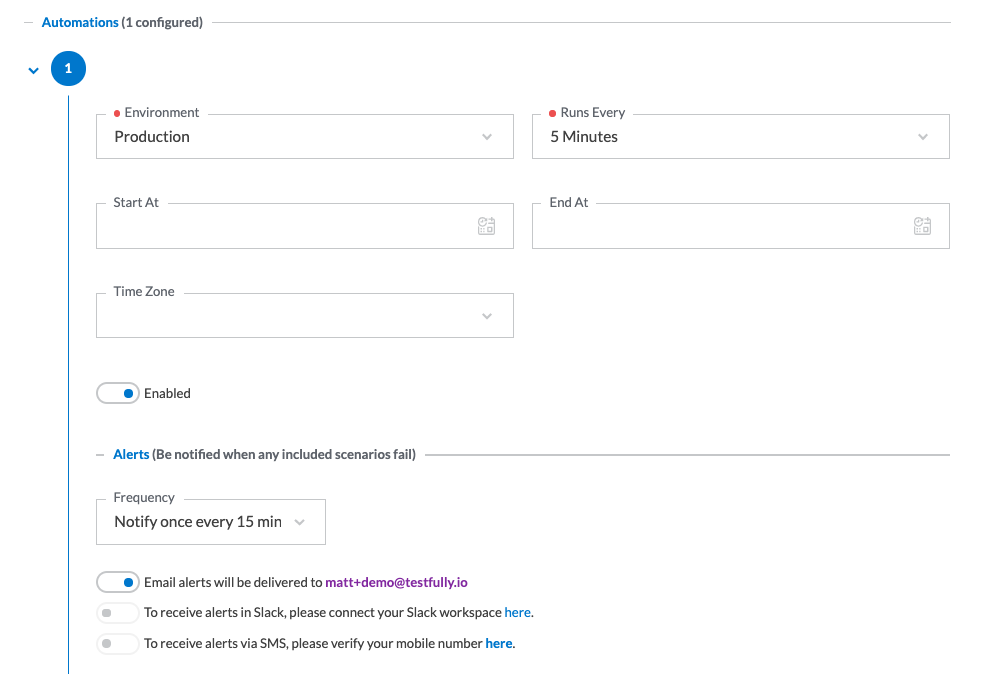

Automation is our solution to API monitoring. Using the automations feature, you can run collections in the background. Automations are attached to collections, and a collection can have zero or more automations. An automation runs your test cases simultaneously against the selected environment, within the provided time frame and at the provided frequency.
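To illustrate what a time frame plus a frequency means in practice, the sketch below lists the moments at which a collection would run. The `scheduled_runs` helper and the dates are hypothetical, and treating the end of the time frame as inclusive is an assumption.

```python
from datetime import datetime, timedelta


def scheduled_runs(start: datetime, end: datetime, every: timedelta) -> list:
    """List run times from start up to and including end, one per interval."""
    if every <= timedelta(0):
        raise ValueError("frequency must be positive")
    runs = []
    current = start
    while current <= end:
        runs.append(current)
        current += every
    return runs


# Hypothetical time frame: 09:00 to 09:20, running every 5 minutes.
runs = scheduled_runs(
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 20),
    timedelta(minutes=5),
)
print([r.strftime("%H:%M") for r in runs])
# prints ['09:00', '09:05', '09:10', '09:15', '09:20']
```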

Software teams enable alerts on automations to be notified when any of the tests within a collection fail. We currently deliver alerts via SMS, e-mail and Slack messages, with plans to introduce mobile push notifications, Discord, Web Hooks and Teams in the future.

The screenshot below shows a configured automation to run test cases every 5 minutes and to notify users about failed tests every 15 minutes via email.

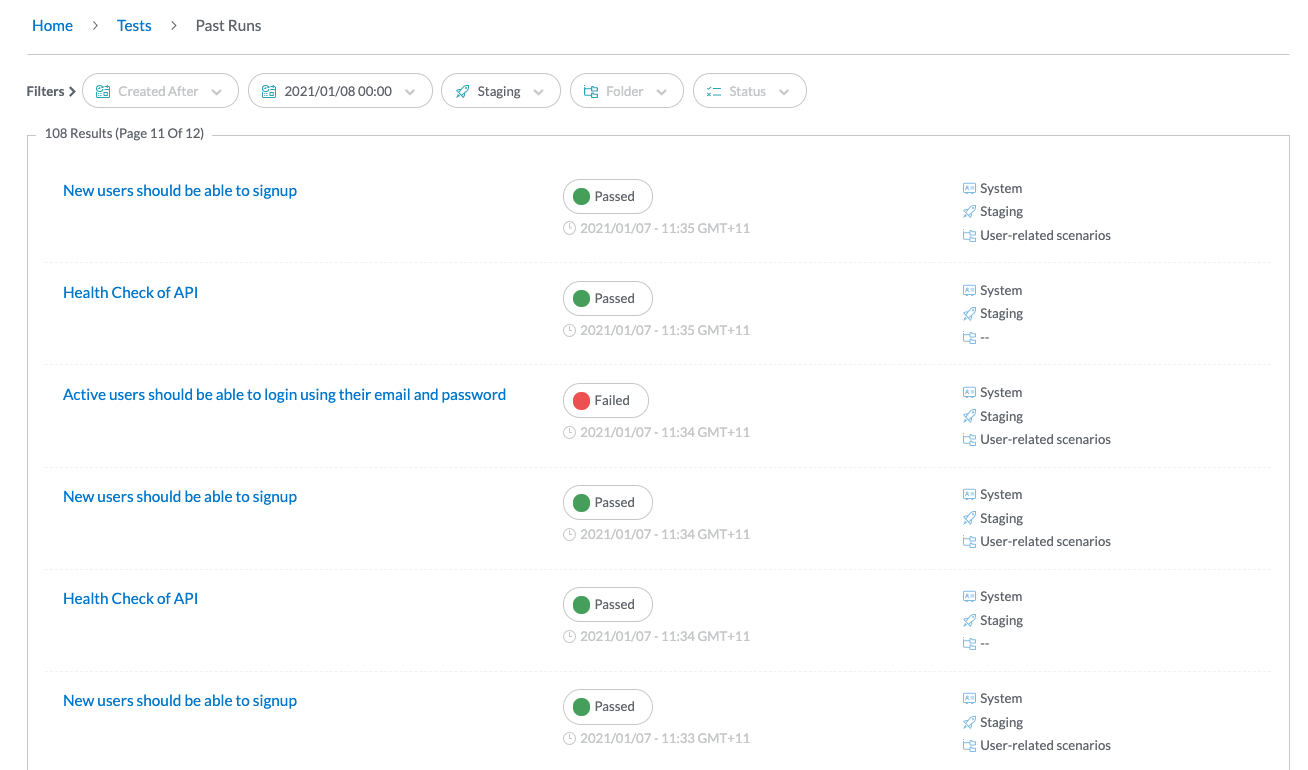

Archive

Not only can we run your test cases for you, but we also capture the results and make them available via our dashboard. That’s the kind of information you need to debug issues. Our archive contains executions of all test cases, steps within tests, collections and automations. We have included a set of filtering options so you can narrow down results to a certain time frame, environment and test case. Using our archive, you can go back in time and see the behaviour of your API at any given time.

Reports (coming soon)

Wouldn’t it be nice if you had a way to see the average response time of your login endpoint during the last 90 days and measure the impact of your recent DB optimisation on it? This and many similar questions came to mind while we were building and maintaining APIs in production, and that’s why we thought it would be great to give our users a way to visualise the numbers. Using our reporting dashboard, you can turn historical data about your API into insights and take data-oriented actions.